Kling Avatar 2.0

Explore Kling Avatar 2.0 Features

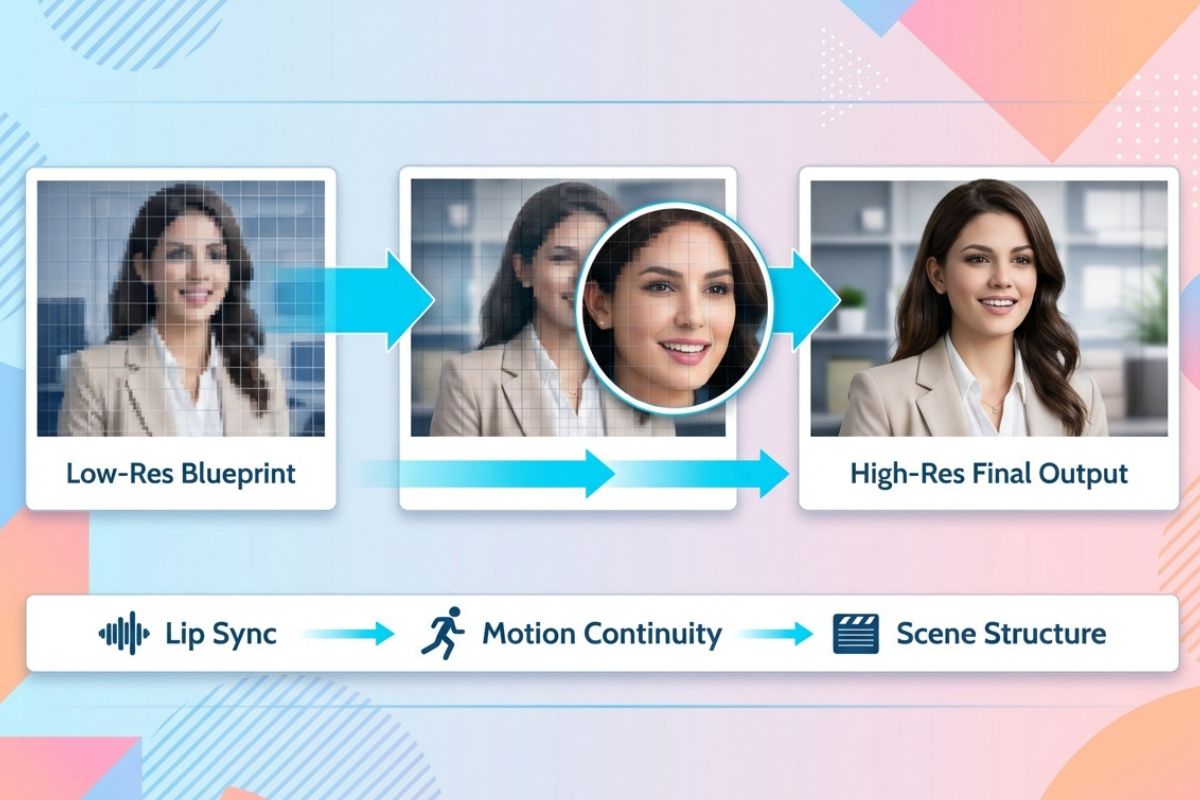

Spatio-Temporal Cascade

Kling Avatar 2.0 first generates a low-resolution “blueprint” and then refines it progressively, producing multi-minute, time-coherent videos without identity drift.

This cascade approach preserves lip sync, motion continuity, and scene structure, making it reliable for long-form explainers, tutorials, and branded content.
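The blueprint-then-refine idea can be shown with a deliberately tiny sketch. It rests on three assumptions. First, each frame is reduced to a single number that stands in for a pose or identity feature. Second, linear interpolation stands in for the refinement model. Third, the names `plan_blueprint`, `refine_segment`, and `cascade` are hypothetical and not part of any Kling API. The point is structural: each refined segment is anchored to two blueprint keyframes rather than to the previous segment's output, so small errors cannot compound across a long video.

```python
# Toy coarse-to-fine cascade (hypothetical, not Kling's implementation).
# Assumption: each frame is a single float standing in for a pose/identity
# feature, and linear interpolation stands in for the refinement model.

def plan_blueprint(start: float, end: float, num_keyframes: int) -> list[float]:
    """Stage 1: a sparse, low-resolution plan spanning the whole video."""
    step = (end - start) / (num_keyframes - 1)
    return [start + i * step for i in range(num_keyframes)]


def refine_segment(a: float, b: float, frames: int) -> list[float]:
    """Stage 2: produce `frames` frames starting at keyframe a and heading to b."""
    return [a + (b - a) * t / frames for t in range(frames)]


def cascade(start: float, end: float, num_keyframes: int,
            frames_per_segment: int) -> list[float]:
    keys = plan_blueprint(start, end, num_keyframes)
    video: list[float] = []
    for a, b in zip(keys, keys[1:]):
        # Each segment depends only on two blueprint keyframes, not on the
        # previous segment's output, so errors cannot accumulate over time.
        video.extend(refine_segment(a, b, frames_per_segment))
    video.append(keys[-1])  # end exactly on the final keyframe
    return video


print(cascade(0.0, 1.0, 3, 2))  # [0.0, 0.25, 0.5, 0.75, 1.0]
```

In this toy, every second frame of `cascade(0.0, 1.0, 3, 2)` reproduces the blueprint `[0.0, 0.5, 1.0]` exactly. Because segments depend only on the blueprint, they could also be refined in parallel.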

Co-Reasoning Director

The Co-Reasoning Director in Kling AI coordinates audio, visual, and text expert models to turn vague instructions into shot-level plans with emotional nuance.

Built on advances from Kling 2.6, it iteratively resolves modality conflicts and produces coherent, context-aware performances.

Identity-Aware Multi-Actor Control

Character-specific mask prediction and multi-stream audio driving keep each avatar’s voice, gaze, and mouth movements independent, avoiding cross-talk artifacts.
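A rough sketch shows why per-character masks prevent cross-talk. Suppose every region of the frame belongs to exactly one character and each region reads only its owner's audio stream. Then one speaker's voice has no path to another avatar's mouth. The sketch makes several simplifying assumptions. A frame is flattened into a list of regions. Masks are given as character labels, with `None` for background. Audio is reduced to a per-frame loudness value. The function and data layout are hypothetical illustrations, not Kling's implementation.

```python
from typing import Optional

# Hypothetical toy, not Kling's implementation: one frame is a flat list of
# regions, and masks[i] names the character that owns region i (None = background).

def drive_frame(
    masks: list[Optional[str]],
    audio_streams: dict[str, list[float]],
    frame_idx: int,
) -> list[float]:
    """Return a mouth-activation value for every region in one frame."""
    activations = []
    for owner in masks:
        if owner is None:
            activations.append(0.0)  # background regions ignore all audio
        else:
            # A region reads only its owner's stream, so one speaker's audio
            # never moves another character's mouth (no cross-talk).
            activations.append(audio_streams[owner][frame_idx])
    return activations


masks = ["alice", "alice", None, "bob", "bob"]
streams = {"alice": [0.9, 0.0], "bob": [0.0, 0.7]}  # per-frame loudness in [0, 1]
print(drive_frame(masks, streams, 0))  # [0.9, 0.9, 0.0, 0.0, 0.0]
print(drive_frame(masks, streams, 1))  # [0.0, 0.0, 0.0, 0.7, 0.7]
```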

Combined with AI avatar generators that offer a rich template library, this lets teams rapidly assemble polished multi-character scenes with consistent styling.

Efficient Production & Deployment

Trajectory-preserving distillation and per-shot negative guidance cut inference cost while retaining visual fidelity, enabling production workflows at scale.
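Negative guidance is commonly implemented as classifier-free guidance with a negative condition. The model's prediction under the negative condition takes the place of the unconditional prediction, and sampling computes `eps_neg + scale * (eps_pos - eps_neg)` to steer away from it. The sketch below assumes that "per-shot" means each shot carries its own negative condition. The source does not spell out Kling's exact formulation. `predict` is a toy stand-in for a diffusion model, and the shot names and vectors are made up for illustration.

```python
# Sketch of negative-prompt classifier-free guidance with a per-shot
# negative condition. Assumptions: `predict` is a toy stand-in for a
# diffusion model's noise prediction, and conditions are plain vectors.

def predict(x: list[float], cond: list[float]) -> list[float]:
    """Toy model: the 'noise prediction' is the latent shifted by the condition."""
    return [xi + ci for xi, ci in zip(x, cond)]


def guided(eps_pos: list[float], eps_neg: list[float], scale: float) -> list[float]:
    """eps_neg + scale * (eps_pos - eps_neg): steer toward pos, away from neg."""
    return [n + scale * (p - n) for p, n in zip(eps_pos, eps_neg)]


def guided_for_shot(x, shot, positive, negatives_by_shot, scale=2.0):
    # Hypothetical: each shot looks up its own negative condition, so a
    # close-up and a wide shot can suppress different failure modes.
    eps_pos = predict(x, positive)
    eps_neg = predict(x, negatives_by_shot[shot])
    return guided(eps_pos, eps_neg, scale)


x = [0.0, 0.0]
positive = [1.0, 0.0]
negatives = {"close_up": [0.0, 1.0], "wide": [0.0, 0.0]}
print(guided_for_shot(x, "close_up", positive, negatives))  # [2.0, -1.0]
print(guided_for_shot(x, "wide", positive, negatives))      # [2.0, 0.0]
```

With `scale=1.0`, the result equals the positive prediction alone. Larger scales push further away from that shot's negative condition.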

The result is affordable AI avatar production for bloggers and vloggers, delivered through platforms such as OCMaker AI.

Key Features of Kling Avatar 2.0

Long-form Video Support

Enhanced Text Understanding & Instruction Execution

More Natural Emotional Expression & Facial Detail

Improved Motion Coordination & Physical Realism

High-quality Multi-character Training & Generalization

Stronger Controllability with Negative Guidance

How to Use Kling Avatar 2.0

Upload Your Reference Image

Start by uploading a reference photo to establish the visual style, character look, or brand identity for your Kling Avatar 2.0 video.

Enter Your Prompt

Write a prompt to define the scene, action, and mood. Include details like emotion, pacing, camera style, or dialogue direction for the avatar performance.
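One way to keep prompts consistent across many videos is to build them from the same fields every time. The helper below is a hypothetical convenience that assumes plain-text prompts. Its field names mirror the details suggested above; they are not parameters of any Kling Avatar 2.0 API.

```python
# Hypothetical prompt helper; field names are illustrative and are not
# parameters of any Kling Avatar 2.0 API.

def build_prompt(scene: str, action: str, mood: str, emotion: str = "",
                 pacing: str = "", camera: str = "", dialogue: str = "") -> str:
    parts = [f"Scene: {scene}.", f"Action: {action}.", f"Mood: {mood}."]
    optional = {
        "Emotion": emotion,
        "Pacing": pacing,
        "Camera": camera,
        "Dialogue direction": dialogue,
    }
    for label, value in optional.items():
        if value:  # include only the details the user actually specified
            parts.append(f"{label}: {value}.")
    return " ".join(parts)


print(build_prompt(
    scene="a bright home studio",
    action="explains a recipe to the camera",
    mood="warm and relaxed",
    emotion="enthusiastic",
    camera="slow push-in",
))
# Scene: a bright home studio. Action: explains a recipe to the camera. Mood: warm and relaxed. Emotion: enthusiastic. Camera: slow push-in.
```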

Adjust Settings and Generate

Set output options such as resolution, aspect ratio, and length, then click Generate. Review the result, fine-tune if needed, and export your final Kling Avatar 2.0 video.